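[Cloud Native] Setting Up a Minimal Single-Node OpenShift Development Environment Locally

Tags: [Cloud Native] Setting Up a Minimal Single-Node OpenShift Development Environment Locally, JavaScript blog, 51CTO blog

2023-04-01 18:23:42, 113 views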

Contents

- I. What Is CodeReady Containers (CRC)?

- II. Deploying CodeReady Containers Locally

- III. Using CodeReady Containers

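WeChat Official Account:

MCNU云原生, where this article was first published. Search for it on WeChat and follow for more hands-on content.

As covered in a previous article, OpenShift comes in two editions: OCP, the enterprise edition, and OKD, the community edition. OCP requires a Red Hat license, which makes it a poor fit for development, testing, and experimental environments. OKD can support development and testing, but deploying it is still somewhat involved and too heavyweight for scenarios that only need quick experiments. For these cases Red Hat provides a single-node OpenShift called CodeReady Containers.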

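I. What Is CodeReady Containers (CRC)?

CodeReady Containers bundles a minimal, preconfigured OpenShift cluster (which includes Kubernetes). Any reasonably well-specified laptop or desktop can install it without difficulty. It provides a quick, simple way to set up a containerized development environment on a local machine, which is very convenient for day-to-day development and testing.

CodeReady Containers supports Linux, macOS, and Windows. Because it relies on virtualization, each operating system also needs a hypervisor: libvirt on Linux, HyperKit on macOS, and Hyper-V on Windows.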
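As a quick pre-check of the virtualization requirement on Linux, the sketch below looks for the CPU flags that indicate hardware virtualization (vmx for Intel VT-x, svm for AMD-V) and for the /dev/kvm device node. It is a minimal sketch assuming a Linux host; the function names are hypothetical, and crc setup runs its own, more complete checks ("Checking if Virtualization is enabled", "Checking if KVM is enabled" in its output later).

```bash
#!/usr/bin/env bash
# Minimal sketch (Linux only): does the CPU advertise hardware virtualization?
# Intel VT-x appears as the "vmx" flag, AMD-V as "svm".
# The cpuinfo path is a parameter so the check can be run against a sample file.
has_virt_flags() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  # -q: quiet, -w: match whole words only (so "vme" does not count), -E: extended regex
  grep -qwE 'vmx|svm' "$cpuinfo"
}

# KVM exposes a character device once the kvm kernel modules are loaded.
has_kvm_device() {
  [ -c /dev/kvm ]
}
```

For example: has_virt_flags && has_kvm_device && echo "KVM looks usable". If the flags are missing, virtualization may be disabled in the BIOS/UEFI firmware.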

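CodeReady Containers helps developers quickly create, test, and deploy applications locally. When development is finished, the applications can be deployed to OpenShift Container Platform in production without changes to the production environment, since both run OpenShift. CRC also provides an easy-to-use web console and command-line interface for managing the containerized development environment.

Beyond basic application deployment and management, CodeReady Containers offers other features, for example:

- Integrated development environment (IDE) support

CodeReady Containers can be integrated with popular IDEs such as Eclipse and VS Code, so developers can write, test, and deploy applications on their local machine.

- Continuous integration/continuous deployment (CI/CD) support

CodeReady Containers works with CI/CD tools such as Jenkins and GitLab, so developers can build and test applications locally and then deploy them to production.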

II. Deploying CodeReady Containers Locally

Deploying CodeReady Containers locally is fairly easy, since the process is largely automated. The walkthrough below uses Linux (CentOS 7) as the example.

Prepare a host with at least the following resources:

- 4 physical CPU cores

- 9 GB of free memory

- 35 GB of storage space
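A minimal preflight sketch that compares the host against these minimums. Assumptions: a Linux host with GNU coreutils; nproc reports logical CPUs, so on hyper-threaded machines it can overstate physical cores; free memory is read from MemAvailable in /proc/meminfo and free disk from the filesystem holding $HOME, where crc keeps its ~/.crc directory. The variable and function names are hypothetical.

```bash
#!/usr/bin/env bash
# Minimums documented for CRC (see the list above).
MIN_CPUS=4
MIN_MEM_GB=9
MIN_DISK_GB=35

# Pure comparison, so it can be tested without touching the host.
# Returns 0 only if all three values meet the minimums.
meets_requirements() {
  local cpus=$1 mem_gb=$2 disk_gb=$3
  [ "$cpus" -ge "$MIN_CPUS" ] &&
    [ "$mem_gb" -ge "$MIN_MEM_GB" ] &&
    [ "$disk_gb" -ge "$MIN_DISK_GB" ]
}

# Reads the current host's values (Linux-only paths and GNU tools).
host_preflight() {
  local cpus mem_gb disk_gb
  cpus=$(nproc)                       # logical CPUs, not physical cores
  # MemAvailable is reported in kB; convert to whole GiB (rounded down)
  mem_gb=$(awk '/^MemAvailable:/ {print int($2 / 1024 / 1024)}' /proc/meminfo)
  # Available space in GiB on the filesystem that holds $HOME (~/.crc lives there)
  disk_gb=$(df -BG --output=avail "$HOME" | tail -n 1 | tr -dc '0-9')
  echo "cpus=$cpus mem_gb=$mem_gb disk_gb=$disk_gb"
  meets_requirements "$cpus" "$mem_gb" "$disk_gb"
}
```

Run host_preflight as the user that will run crc; it prints the measured values and returns non-zero if any minimum is not met.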

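Also create a dedicated user for running crc, because crc cannot be run as root (it reports this if you try). Since crc setup performs some steps with root access, this user needs sudo rights.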
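The guard below is a minimal sketch that mirrors crc's own "Checking if running as non-root" step, handy at the top of any wrapper script around crc. The function name is hypothetical. The user-creation commands in the comments assume CentOS 7, where members of the wheel group may use sudo by default, and reuse the user name k8s seen in this article's prompts.

```bash
#!/usr/bin/env bash
# Creating the dedicated user needs root, e.g. (assumption: CentOS 7, wheel = sudo):
#   useradd -m k8s && passwd k8s && usermod -aG wheel k8s

# Fails if the given uid (default: the current user's) is root (uid 0).
ensure_non_root() {
  local uid="${1:-$(id -u)}"
  if [ "$uid" -eq 0 ]; then
    echo "crc must not be run as root; switch to a regular user" >&2
    return 1
  fi
  return 0
}
```

For example, start a script with ensure_non_root || exit 1 before calling crc setup or crc start.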

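First download a crc release from https://crc.dev/crc/. Note that the arm64 architecture is not supported for now, so check your system's architecture; this example downloads the linux-amd64 package.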
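A minimal sketch for checking the architecture before downloading, assuming the Linux packages follow the linux-amd64 naming used in this article; uname -m reports x86_64 on such machines. The function name is hypothetical, and only amd64 is accepted because that is the only Linux architecture this walkthrough covers.

```bash
#!/usr/bin/env bash
# Map `uname -m` output to the "amd64" suffix of the Linux package name.
# arm64/aarch64 (and anything else) is rejected, per the note above.
crc_linux_arch() {
  local machine="${1:-$(uname -m)}"
  case "$machine" in
    x86_64|amd64) echo "amd64" ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
}
```

For example: arch=$(crc_linux_arch) && echo "download crc-linux-${arch}.tar.xz".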

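Extract the archive and move crc into a directory on the system PATH. The archive is xz-compressed, so use the J option (z is for gzip):

[root@node1 openshift]# tar xJf crc-linux-amd64.tar.xz

[root@node1 crc-linux-2.15.0-amd64]# mv crc /usr/local/bin/

Check the crc version. Besides OpenShift, the output also lists a Podman version, because CRC can alternatively run a Podman preset instead of an OpenShift cluster. OpenShift itself uses CRI-O, not Podman, as its container runtime.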

[root@node1 crc-linux-2.15.0-amd64]# crc version

CRC version: 2.15.0+cc05160

OpenShift version: 4.12.5

Podman version: 4.3.1

Next, configure the environment with crc setup. It checks whether the required services are installed correctly and running, for example:

[k8s@node1 ~]$ crc setup

INFO Using bundle path /home/k8s/.crc/cache/crc_libvirt_4.12.5_amd64.crcbundle

INFO Checking if running as non-root

INFO Checking if running inside WSL2

INFO Checking if crc-admin-helper executable is cached

INFO Caching crc-admin-helper executable

INFO Using root access: Changing ownership of /home/k8s/.crc/bin/crc-admin-helper-linux

INFO Using root access: Setting suid for /home/k8s/.crc/bin/crc-admin-helper-linux

INFO Checking if running on a supported CPU architecture

INFO Checking minimum RAM requirements

INFO Checking if crc executable symlink exists

INFO Creating symlink for crc executable

INFO Checking if Virtualization is enabled

INFO Checking if KVM is enabled

INFO Checking if libvirt is installed

INFO Checking if user is part of libvirt group

INFO Adding user to libvirt group

INFO Using root access: Adding user to the libvirt group

INFO Checking if active user/process is currently part of the libvirt group

INFO Checking if libvirt daemon is running

INFO Checking if a supported libvirt version is installed

INFO Checking if crc-driver-libvirt is installed

INFO Installing crc-driver-libvirt

INFO Checking if systemd-networkd is running

INFO Checking if NetworkManager is installed

INFO Checking if NetworkManager service is running

NetworkManager is required. Please make sure it is installed and running manually

The setup reports that NetworkManager is not running. Checking its status confirms that it is inactive:

[k8s@node1 ~]$ sudo systemctl status NetworkManager

● NetworkManager.service - Network Manager

Loaded: loaded (/usr/lib/systemd/system/NetworkManager.service; disabled; vendor preset: enabled)

Active: inactive (dead)

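Docs: man:NetworkManager(8)

Start NetworkManager (the unit is also shown as disabled; sudo systemctl enable --now NetworkManager would additionally start it at boot):

[k8s@node1 ~]$ sudo systemctl start NetworkManager

Run setup again; this time it succeeds: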

[k8s@node1 ~]$ crc setup

INFO Using bundle path /home/k8s/.crc/cache/crc_libvirt_4.12.5_amd64.crcbundle

INFO Checking if running as non-root

INFO Checking if running inside WSL2

INFO Checking if crc-admin-helper executable is cached

INFO Checking if running on a supported CPU architecture

INFO Checking minimum RAM requirements

INFO Checking if crc executable symlink exists

INFO Checking if Virtualization is enabled

INFO Checking if KVM is enabled

INFO Checking if libvirt is installed

INFO Checking if user is part of libvirt group

INFO Checking if active user/process is currently part of the libvirt group

INFO Checking if libvirt daemon is running

INFO Checking if a supported libvirt version is installed

INFO Checking if crc-driver-libvirt is installed

INFO Checking if systemd-networkd is running

INFO Checking if NetworkManager is installed

INFO Checking if NetworkManager service is running

INFO Checking if /etc/NetworkManager/conf.d/crc-nm-dnsmasq.conf exists

INFO Checking if /etc/NetworkManager/dnsmasq.d/crc.conf exists

INFO Checking if libvirt 'crc' network is available

INFO Checking if libvirt 'crc' network is active

INFO Checking if CRC bundle is extracted in '$HOME/.crc'

INFO Checking if /home/k8s/.crc/cache/crc_libvirt_4.12.5_amd64.crcbundle exists

INFO Getting bundle for the CRC executable

INFO Downloading bundle: /home/k8s/.crc/cache/crc_libvirt_4.12.5_amd64.crcbundle...

0 B / 2.98 GiB [________________________________________________________________________________________________________________________] 0.00% ? p/s

INFO Uncompressing /home/k8s/.crc/cache/crc_libvirt_4.12.5_amd64.crcbundle

crc.qcow2: 11.82 GiB / 11.82 GiB [---------------------------------------------------------------------------------------------------------] 100.00%

oc: 124.65 MiB / 124.65 MiB [--------------------------------------------------------------------------------------------------------------] 100.00%

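Your system is correctly setup for using CRC. Use 'crc start' to start the instance

CodeReady Containers can now be launched with crc start. Startup requires a pull secret, which is used to download content from Red Hat. Obtaining one requires a Red Hat account; download it from https://console.redhat.com/openshift/create/local, as shown in the figure.

The pull secret can be entered interactively during startup or specified with -p pull-secret.json. The run below succeeds, and at the end it prints the web console URL, the login credentials, and how to use the oc command: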

[k8s@node1 ~]$ crc start

INFO Checking if running as non-root

INFO Checking if running inside WSL2

INFO Checking if crc-admin-helper executable is cached

INFO Checking if running on a supported CPU architecture

INFO Checking minimum RAM requirements

INFO Checking if crc executable symlink exists

INFO Checking if Virtualization is enabled

INFO Checking if KVM is enabled

INFO Checking if libvirt is installed

INFO Checking if user is part of libvirt group

INFO Checking if active user/process is currently part of the libvirt group

INFO Checking if libvirt daemon is running

INFO Checking if a supported libvirt version is installed

INFO Checking if crc-driver-libvirt is installed

INFO Checking if systemd-networkd is running

INFO Checking if NetworkManager is installed

INFO Checking if NetworkManager service is running

INFO Checking if /etc/NetworkManager/conf.d/crc-nm-dnsmasq.conf exists

INFO Checking if /etc/NetworkManager/dnsmasq.d/crc.conf exists

INFO Checking if libvirt 'crc' network is available

INFO Checking if libvirt 'crc' network is active

INFO Loading bundle: crc_libvirt_4.12.5_amd64...

CRC requires a pull secret to download content from Red Hat.

You can copy it from the Pull Secret section of https://console.redhat.com/openshift/create/local.

? Please enter the pull secret **********************************************************************************************************************

WARN Cannot add pull secret to keyring: exec: "dbus-launch": executable file not found in $PATH

INFO Creating CRC VM for openshift 4.12.5...

INFO Generating new SSH key pair...

INFO Generating new password for the kubeadmin user

INFO Starting CRC VM for openshift 4.12.5...

INFO CRC instance is running with IP 192.168.130.11

INFO CRC VM is running

INFO Updating authorized keys...

INFO Check internal and public DNS query...

INFO Check DNS query from host...

WARN Wildcard DNS resolution for apps-crc.testing does not appear to be working

INFO Verifying validity of the kubelet certificates...

INFO Starting kubelet service

INFO Waiting for kube-apiserver availability... [takes around 2min]

INFO Adding user's pull secret to the cluster...

INFO Updating SSH key to machine config resource...

INFO Waiting until the user's pull secret is written to the instance disk...

INFO Changing the password for the kubeadmin user

INFO Updating cluster ID...

INFO Updating root CA cert to admin-kubeconfig-client-ca configmap...

INFO Starting openshift instance... [waiting for the cluster to stabilize]

INFO 5 operators are progressing: image-registry, kube-storage-version-migrator, network, openshift-controller-manager, service-ca

INFO 5 operators are progressing: image-registry, kube-storage-version-migrator, network, openshift-controller-manager, service-ca

INFO 5 operators are progressing: image-registry, kube-storage-version-migrator, network, openshift-controller-manager, service-ca

INFO 5 operators are progressing: image-registry, kube-storage-version-migrator, network, openshift-controller-manager, service-ca

INFO 4 operators are progressing: image-registry, kube-storage-version-migrator, openshift-controller-manager, service-ca

INFO 3 operators are progressing: image-registry, openshift-controller-manager, service-ca

INFO 2 operators are progressing: image-registry, openshift-controller-manager

INFO 2 operators are progressing: image-registry, openshift-controller-manager

INFO All operators are available. Ensuring stability...

INFO Operators are stable (2/3)...

INFO Operators are stable (3/3)...

INFO Adding crc-admin and crc-developer contexts to kubeconfig...

Started the OpenShift cluster.

The server is accessible via web console at:

https://console-openshift-console.apps-crc.testing

Log in as administrator:

Username: kubeadmin

Password: ereDn-BUtfV-HF37v-cDJvu

Log in as user:

Username: developer

Password: developer

Use the 'oc' command line interface:

$ eval $(crc oc-env)

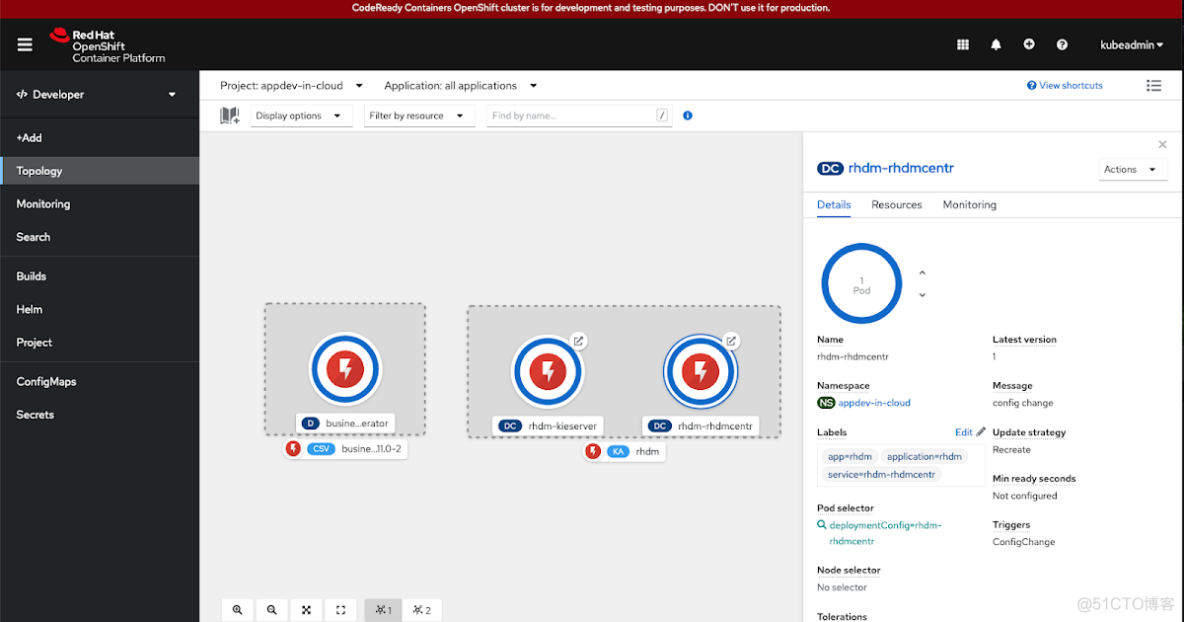

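$ oc login -u developer https://api.crc.testing:6443

That essentially completes the installation. You can now use either the web console or the oc command.

The web console is at https://console-openshift-console.apps-crc.testing/ and the oc login URL is https://api.crc.testing:6443.

These addresses are generated by crc during installation and depend on DNS resolution. By default they resolve to the address of the virtual machine running crc, usually 192.168.130.11, which you can get with the crc ip command.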
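To confirm that these names map to the VM's address, a minimal sketch can scan a hosts-format file for each hostname. It only inspects the file you pass (for example /etc/hosts) and does not query DNS, so names resolved through the dnsmasq configuration written by crc setup would not be detected by it. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Usage: hosts_maps_to <hosts-file> <ip> <hostname>...
# Succeeds only if every hostname appears as a field on a line whose first
# field is <ip>. Comment lines start with "#", so their first field never
# equals an IP address.
hosts_maps_to() {
  local hosts_file=$1 ip=$2
  shift 2
  local name
  for name in "$@"; do
    awk -v ip="$ip" -v host="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == host) found = 1 }
      END { exit !found }' "$hosts_file" || {
      echo "missing: $name -> $ip" >&2
      return 1
    }
  done
}
```

For example: hosts_maps_to /etc/hosts "$(crc ip)" api.crc.testing console-openshift-console.apps-crc.testing oauth-openshift.apps-crc.testing && echo "hosts entries OK". To see what the system resolver actually returns, getent hosts api.crc.testing is a complementary check.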

At this point /etc/hosts contains the corresponding mappings:

[k8s@node1 ~]$ cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

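192.168.130.11 api.crc.testing canary-openshift-ingress-canary.apps-crc.testing console-openshift-console.apps-crc.testing default-route-openshift-image-registry.apps-crc.testing downloads-openshift-console.apps-crc.testing oauth-openshift.apps-crc.testing

The IP returned by crc ip belongs to the KVM virtual machine that runs crc. It is on a network used by KVM and is reachable only from the host itself; other machines cannot access it directly. To reach the cluster from other machines, you can forward traffic through HAProxy installed on the host.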
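The HAProxy configuration used in the following steps refers to ${CRC_IP}. Because haproxy runs as a systemd service, it does not inherit variables exported in your shell, so it is safer to write the file with the IP already substituted. A minimal sketch, assuming the same ports, timeouts, and backends as this article's configuration and a hypothetical helper name, renders the file from a shell heredoc so that the shell fills in the address:

```bash
#!/usr/bin/env bash
# Print an HAProxy config that forwards ports 80, 443 and 6443 to the CRC VM.
# The unquoted heredoc lets the shell expand ${ip}, so the written file
# contains the literal address rather than a variable reference.
render_haproxy_cfg() {
  local ip=$1
  cat <<EOF
global
    debug

defaults
    log global
    mode http
    timeout connect 5000
    timeout client 500000
    timeout server 500000

frontend apps
    bind 0.0.0.0:80
    option tcplog
    mode tcp
    default_backend apps

backend apps
    mode tcp
    balance roundrobin
    server webserver1 ${ip}:80 check

frontend apps_ssl
    bind 0.0.0.0:443
    option tcplog
    mode tcp
    default_backend apps_ssl

backend apps_ssl
    mode tcp
    balance roundrobin
    option ssl-hello-chk
    server webserver1 ${ip}:443 check

frontend api
    bind 0.0.0.0:6443
    option tcplog
    mode tcp
    default_backend api

backend api
    mode tcp
    balance roundrobin
    option ssl-hello-chk
    server webserver1 ${ip}:6443 check
EOF
}
```

For example: render_haproxy_cfg "$(crc ip)" | sudo tee /etc/haproxy/haproxy.cfg >/dev/null, then sudo haproxy -c -f /etc/haproxy/haproxy.cfg to validate the file before restarting. This overwrites haproxy.cfg rather than appending to it, so take the backup described in the steps that follow first.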

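Install HAProxy:

[k8s@node1 ~]$ sudo yum install -y haproxy

Store the crc IP in an environment variable and back up the HAProxy configuration file in /etc/haproxy (writing there requires sudo):

[k8s@node1 haproxy]$ export CRC_IP=$(crc ip)

[k8s@node1 haproxy]$ sudo cp haproxy.cfg haproxy.cfg.backup

Add the following to haproxy.cfg to configure forwarding. HAProxy runs as a systemd service and does not see variables exported in your shell, so in the saved file replace ${CRC_IP} with the actual address printed by crc ip (for example 192.168.130.11):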

global

debug

defaults

log global

mode http

timeout connect 5000

timeout client 500000

timeout server 500000

frontend apps

bind 0.0.0.0:80

option tcplog

mode tcp

default_backend apps

backend apps

mode tcp

balance roundrobin

server webserver1 ${CRC_IP}:80 check

frontend apps_ssl

bind 0.0.0.0:443

option tcplog

mode tcp

default_backend apps_ssl

backend apps_ssl

mode tcp

balance roundrobin

option ssl-hello-chk

server webserver1 ${CRC_IP}:443 check

frontend api

bind 0.0.0.0:6443

option tcplog

mode tcp

default_backend api

backend api

mode tcp

balance roundrobin

option ssl-hello-chk

server webserver1 ${CRC_IP}:6443 check

Restart HAProxy:

$ sudo systemctl restart haproxy

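$ sudo systemctl status haproxy

III. Using CodeReady Containers

The crc web console is very user-friendly and generally easy to use, so this section focuses on operating crc from the command line.

Check the crc status:

crc status

Stop crc:

crc stop

Start crc:

crc start

List all nodes in the cluster:

oc get nodes

Show details for a specific node:

oc describe node node-name

Log in: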

[k8s@node1 ~]$ oc login -u kubeadmin -p ereDn-BUtfV-HF37v-cDJvu https://api.crc.testing:6443

Login successful.

You have access to 66 projects, the list has been suppressed. You can list all projects with 'oc projects'

Using project "default".
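Typing the kubeadmin password by hand is brittle, because crc generates it when the instance is created (see "Generating new password for the kubeadmin user" in the start log). The crc console --credentials command prints ready-made login commands; the sketch below extracts the admin password from that text. Assumption: the output contains a line of the form oc login -u kubeadmin -p <password> <url>. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Read credential text on stdin and print the token that follows "-p"
# on the first line mentioning "-u kubeadmin".
kubeadmin_password() {
  awk '/-u kubeadmin/ {
    for (i = 1; i < NF; i++)
      if ($i == "-p") { print $(i + 1); exit }
  }'
}
```

For example: oc login -u kubeadmin -p "$(crc console --credentials | kubeadmin_password)" https://api.crc.testing:6443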

Create a new project:

[k8s@node1 ~]$ oc new-project <project-name>

List projects:

[k8s@node1 ~]$ oc projects

You have access to the following projects and can switch between them with 'oc project <projectname>':

* default

hostpath-provisioner

kube-node-lease

kube-public

kube-system

openshift

openshift-apiserver

openshift-apiserver-operator

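openshift-authentication

Switch to a project:

[k8s@node1 ~]$ oc project openshift-apiserver

Now using project "openshift-apiserver" on server "https://api.crc.testing:6443".

Show information about a project:

oc describe project projectname

Deploy an application

Deploy a container image into the currently selected project:

oc new-app <container-image>

Check the status of the deployed pods:

oc get pods

Create a route for access

In OpenShift, external access to an application goes through a route. The following command creates a route for a service:

oc expose svc/<service-name>

List routes:

oc get route

oc has many more subcommands for other functionality. Only some of the most commonly used ones are listed here; see the official documentation for the rest.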
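When oc expose is run without a hostname, OpenShift usually derives the route host as <route-name>-<project>.<apps domain>; the console route in /etc/hosts (console-openshift-console.apps-crc.testing) follows this pattern. A minimal sketch that computes that host, assuming the route name equals the service name (the oc expose default) and apps-crc.testing as the domain; the function name and the sample names myapp and demo are hypothetical.

```bash
#!/usr/bin/env bash
# Usage: route_host <route-name> <project> [apps-domain]
# Prints the host name a default route is expected to receive.
route_host() {
  local route=$1 project=$2 domain=${3:-apps-crc.testing}
  printf '%s-%s.%s\n' "$route" "$project" "$domain"
}
```

Compare the result with oc get route myapp -o jsonpath='{.spec.host}'. If crc start printed the wildcard DNS warning seen earlier, the new host may not resolve; one workaround is to append it to /etc/hosts: echo "$(crc ip) $(route_host myapp demo)" | sudo tee -a /etc/hosts.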
